
Electronically generated rhythms are often perceived as too artificial. New software now allows producers to make rhythms in computer-produced music sound more natural. Research at the Max Planck Institute for Dynamics and Self-Organization and at Harvard University forms the basis for new, patented methods that generate electronic rhythms following the fractal statistical laws found in the timing of human musicians.

The process, which produces natural-sounding rhythms, has now been licensed to Mixed In Key LLC, whose music software is used worldwide by leading music producers and internationally renowned DJs. A product called “Human Plugins,” which uses this technology, has now been launched.

Nowadays, music is often produced electronically, i.e., without acoustic instruments. The reason is simple: pieces of music can be created and reworked easily, without a recording studio or expensive musical equipment. All that is needed is a computer and a digital audio workstation (DAW), i.e., an electronic device or software for recording, editing and producing music. The desired sound for any software instrument, from piano to drums, is generated and reworked in the DAW.

Much of this music, however, is produced with quantized loops that sound artificial because they have no natural groove, and there is no standardized process for humanizing them. Timing that is too precise sounds artificial to the human ear. But randomly shifting sounds by a few milliseconds isn’t enough: it still doesn’t sound like a live musician playing the part on an instrument.

Without the correct correlations between the timing deviations of successive beats, such shifts usually sound bad to the human ear or are perceived as digital timing errors. The only option was therefore to strive for very precise timing, which has characterized the sound of music in almost all genres since the 1990s.

The new “humanizing” method developed at the MPI-DS makes it possible to add natural variations to electronic rhythms so that they sound more natural. A method built on it at Harvard University, called “Group Humanizer,” extends the approach to several instruments: it makes the timing deviations in the interplay of different electronic and acoustic instruments sound human, as if different musicians were playing together in the same room.

Mixed In Key has further developed this technique and created a way to humanize audio channels and MIDI notes with a set of “Human Plugins” that are compatible with most major DAWs.

Methodical research on natural variances

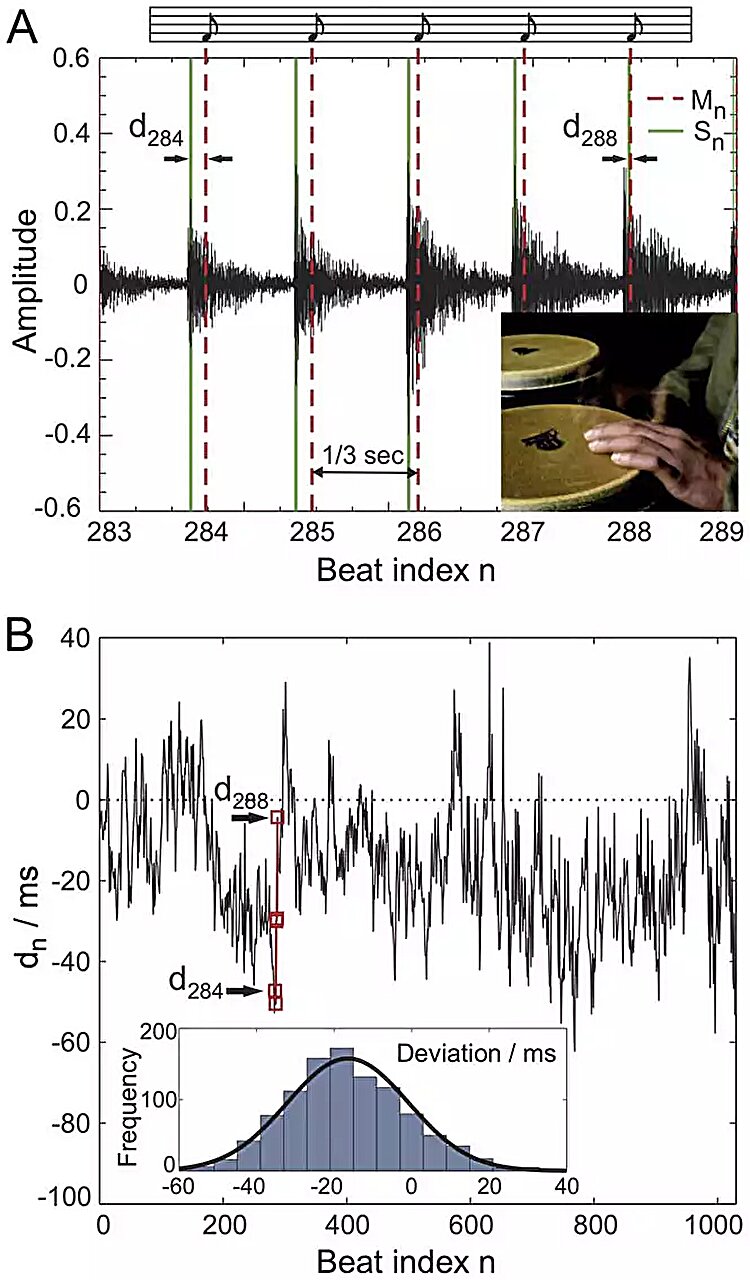

The foundation for the new method was laid in 2007 by Holger Hennig, then a scientist at the Max Planck Institute for Dynamics and Self-Organization in Göttingen, working in the group of Theo Geisel, director emeritus at the institute. Hennig asked whether musicians’ temporal deviations from the exact beat vary purely by chance or follow a certain pattern, and what effect this has on how the music is perceived.

The answer: the deviations follow a fractal pattern. The term fractal was coined by the mathematician Benoit Mandelbrot in 1975 and describes patterns with a high degree of scale invariance, or self-similarity, meaning that the pattern is made up of smaller copies of itself. Fractals occur both as geometric figures and as recurring structures in time series, such as the human heartbeat.

A drummer’s rhythmic fluctuations follow statistical dependencies not just from one beat to the next, but over up to a thousand beats. These dependencies, known as long-range correlations, extend over several minutes and are present on many different time scales at once.
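The difference between independent random shifts and long-range correlated ones can be illustrated with a minimal sketch. It approximates 1/f (“pink”) noise with the Voss-McCartney algorithm, one common way to generate fractal time series; this is an assumption for illustration, not necessarily the method in the MPI-DS patent. The function names, the 10 ms spread and the lag-1 autocorrelation check are likewise hypothetical choices.

```python
import random
import statistics


def pink_noise(n, rows=16, seed=None):
    """Approximate 1/f ("pink") noise with the Voss-McCartney algorithm.

    Row k is redrawn roughly every 2**(k + 1) steps, so slow rows carry
    memory over many beats while fast rows add beat-to-beat jitter.
    """
    rng = random.Random(seed)
    values = [rng.uniform(-1.0, 1.0) for _ in range(rows)]
    out = []
    for i in range(1, n + 1):
        k = (i & -i).bit_length() - 1  # number of trailing zero bits of i
        if k < rows:
            values[k] = rng.uniform(-1.0, 1.0)
        # A fresh white term on every step fills in the highest frequencies.
        out.append(sum(values) + rng.uniform(-1.0, 1.0))
    return out


def scale_to_ms(series, sd_ms):
    """Rescale a series to zero mean and the given standard deviation in ms."""
    mean = statistics.fmean(series)
    sd = statistics.pstdev(series)
    return [(x - mean) / sd * sd_ms for x in series]


def lag1_autocorr(x):
    """Correlation between each deviation and the one on the next beat."""
    m = statistics.fmean(x)
    num = sum((a - m) * (b - m) for a, b in zip(x, x[1:]))
    den = sum((a - m) ** 2 for a in x)
    return num / den


# Hypothetical example: 4096 beats, deviations with a 10 ms standard deviation.
fractal = scale_to_ms(pink_noise(4096, seed=1), 10.0)
rng = random.Random(2)
white = scale_to_ms([rng.gauss(0.0, 1.0) for _ in range(4096)], 10.0)

print(f"fractal lag-1 autocorrelation: {lag1_autocorr(fractal):.2f}")
print(f"random  lag-1 autocorrelation: {lag1_autocorr(white):.2f}")
```

Both series have the same 10 ms spread; only the fractal one carries memory from beat to beat (and, by construction, over much longer spans), which is the property that purely random humanizing lacks.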

How do these temporal deviations affect the way musicians play together? Research at Harvard University shows that the interaction between musicians is also fractal in nature, with so-called long-range cross-correlations.

“The next time deviation of a musician’s beat depends on the history of the deviations of the other musicians several minutes back. Human musical rhythms are not exact, and the fractal nature of the temporal deviations is part of the natural groove of human music,” says Holger Hennig.

But what do these fractal deviations sound like? A piece of music composed specifically for this research was humanized in post-production. In a psychological study at the University of Göttingen, test subjects listened to differently edited versions of the piece: one with conventional random deviations and another with fractal deviations. Most listeners preferred the version with human fractal deviations over the one with conventional random deviations and perceived it as the most natural.

New product for lively and dynamic rhythms

Prompted by the article “When the beat goes off” in the Harvard Gazette, which caused a stir in the music scene in 2012, the London electronic musician James Holden contacted Hennig, who was then doing research at Harvard University. Together they developed the theoretical foundations further into a plugin, written by Holden, for the widely used software Ableton Live.

The so-called “Group Humanizer,” which James Holden uses in his live shows and on his albums, focuses on the interaction of several MIDI tracks. The computer-generated parts react to each other’s timing deviations and sound as if they were being played by musicians performing together. This mutual exchange of information creates a stochastic, fractal coupling and thus a natural musical movement, as if the separately generated parts had been recorded together.
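The idea of parts reacting to each other’s deviations can be sketched with a hypothetical two-track model: each track’s deviation mixes its own long-range correlated noise (again approximated with the Voss-McCartney algorithm) with the other track’s deviation on the previous beat. The coupling rule, the weight `w` and all function names are assumptions for illustration; this is not the published Group Humanizer model.

```python
import random
import statistics


def pink_noise(n, rows=16, seed=None):
    """Approximate 1/f noise (Voss-McCartney): row k is redrawn every 2**(k + 1) steps."""
    rng = random.Random(seed)
    values = [rng.uniform(-1.0, 1.0) for _ in range(rows)]
    out = []
    for i in range(1, n + 1):
        k = (i & -i).bit_length() - 1  # number of trailing zero bits of i
        if k < rows:
            values[k] = rng.uniform(-1.0, 1.0)
        out.append(sum(values) + rng.uniform(-1.0, 1.0))
    return out


def coupled_deviations(noise_a, noise_b, w):
    """Hypothetical coupling: each track leans toward its partner's last deviation.

    w in [0, 1) sets how strongly a track follows its partner; w = 0 leaves
    the two tracks independent.
    """
    if not 0.0 <= w < 1.0:
        raise ValueError("w must be in [0, 1)")
    if len(noise_a) != len(noise_b):
        raise ValueError("both tracks need the same number of beats")
    a, b = [noise_a[0]], [noise_b[0]]
    for n in range(1, len(noise_a)):
        # Both updates read only the partner's deviation on beat n - 1.
        a.append((1 - w) * noise_a[n] + w * b[n - 1])
        b.append((1 - w) * noise_b[n] + w * a[n - 1])
    return a, b


def lagged_corr(x, y, lag=1):
    """Pearson correlation between x on beat n and y on beat n - lag."""
    xs, ys = x[lag:], y[:len(y) - lag]
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((p - mx) * (q - my) for p, q in zip(xs, ys))
    vx = sum((p - mx) ** 2 for p in xs)
    vy = sum((q - my) ** 2 for q in ys)
    return cov / (vx * vy) ** 0.5


noise_a = pink_noise(4096, seed=1)
noise_b = pink_noise(4096, seed=2)
drums, bass = coupled_deviations(noise_a, noise_b, w=0.5)
print(f"drums vs. bass one beat earlier: {lagged_corr(drums, bass):.2f}")
```

Setting `w` to 0 recovers two independently humanized tracks; larger values make each part follow the other more closely. Because the partner’s noise itself has long memory, the resulting cross-correlation is not limited to neighboring beats.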

Moreover, electronic instruments can now adapt naturally, in real time, to the performance of live musicians. “The ability to integrate synthetic and human sound sources in a coherent manner that the Humanizer software has unlocked has let me access a new aesthetic in electronic/acoustic music,” says James Holden.

The Group Humanizer plugin eventually caught the attention of Mixed In Key LLC. The Miami-based company develops and markets software for DJs and music producers, including the “Mixed In Key” suite.

Mixed In Key has now licensed two patents: the humanizing patent developed at the MPI, via Max Planck Innovation, the technology transfer organization of the Max Planck Society, and the Group Humanizer patent developed at Harvard University. On this basis, the company has developed a software plug-in called Human Plugins.

“With the new Human Plugins, the humanization of electronic rhythms is taken to a completely new level. This software has the potential to become the standard in the field of music humanizing,” says Yakov Vorobyev, president and founder of Mixed In Key LLC.

The new plugin can be integrated into all common DAWs, such as Ableton Live, Logic Pro X, FL Studio, Pro Tools and Cubase, and humanizes not only MIDI tracks but also audio tracks, including WAV audio. Users only need to add one or more new audio tracks to the DAW, which makes it possible to humanize several instruments at once (e.g., drums, bass, piano).

A knob sets the strength of the humanizing, i.e., the standard deviation of the timing shifts, which determines the width of the Gaussian distribution identified by the researchers at the MPI-DS. In this way, a particular rhythmic feel can also be created to suit the style of music.
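The knob can be pictured as rescaling a series of deviations to a chosen standard deviation before adding them to the quantized note onsets. In this minimal sketch the linear knob-to-milliseconds mapping, the 30 ms maximum and the function names are hypothetical; the plugin’s actual parameter ranges are not given in the text. Any deviation series can be passed in, including a long-range correlated one.

```python
import random
import statistics

MAX_SD_MS = 30.0  # hypothetical standard deviation at the knob's maximum


def knob_to_sd(knob):
    """Map a knob position in [0, 1] linearly to a standard deviation in ms."""
    if not 0.0 <= knob <= 1.0:
        raise ValueError("knob must be between 0 and 1")
    return knob * MAX_SD_MS


def humanize_onsets(onsets_ms, deviations, knob):
    """Shift quantized onsets by deviations rescaled to the knob's standard deviation."""
    if len(deviations) != len(onsets_ms):
        raise ValueError("need exactly one deviation per onset")
    mean = statistics.fmean(deviations)
    sd = statistics.pstdev(deviations)
    if sd == 0.0:
        return list(onsets_ms)  # nothing to shift
    target_sd = knob_to_sd(knob)
    # Normalize to zero mean and unit spread, then widen to the target spread;
    # onsets are clamped so no note moves before time zero.
    return [max(0.0, t + (d - mean) / sd * target_sd)
            for t, d in zip(onsets_ms, deviations)]


# Sixteenth notes at 120 BPM are 125 ms apart.
grid = [i * 125.0 for i in range(16)]
rng = random.Random(7)
devs = [rng.gauss(0.0, 1.0) for _ in grid]  # stand-in; could be fractal noise
print([round(t, 1) for t in humanize_onsets(grid, devs, knob=0.3)])
```

Separating the shape of the deviations (the input series) from their size (the knob) keeps the two concerns independent: the same fractal pattern can be applied subtly or strongly depending on the style of music.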

“The basic research into fractals and their application in psychoacoustics has created a completely new area of research and the basis for a product with great economic potential that gives musicians completely new options for presenting their music. We are pleased that we were able to win Mixed In Key LLC, one of the most important companies in the music business, as a partner to ensure the worldwide distribution of this fascinating music post-production process,” says Dr. Bernd Ctortecka, patent and license manager at Max Planck Innovation.

Max Planck Society

Citation: Electronic music with a human rhythm (2024, February 5), retrieved 7 February 2024 from https://techxplore.com/news/2024-02-electronic-music-human-rhythm.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
