
A new system that brings together real-world sensing and virtual reality would make it easier for building maintenance personnel to identify and fix issues in commercial buildings that are in operation. The system was developed by computer scientists at the University of California San Diego and Carnegie Mellon University.

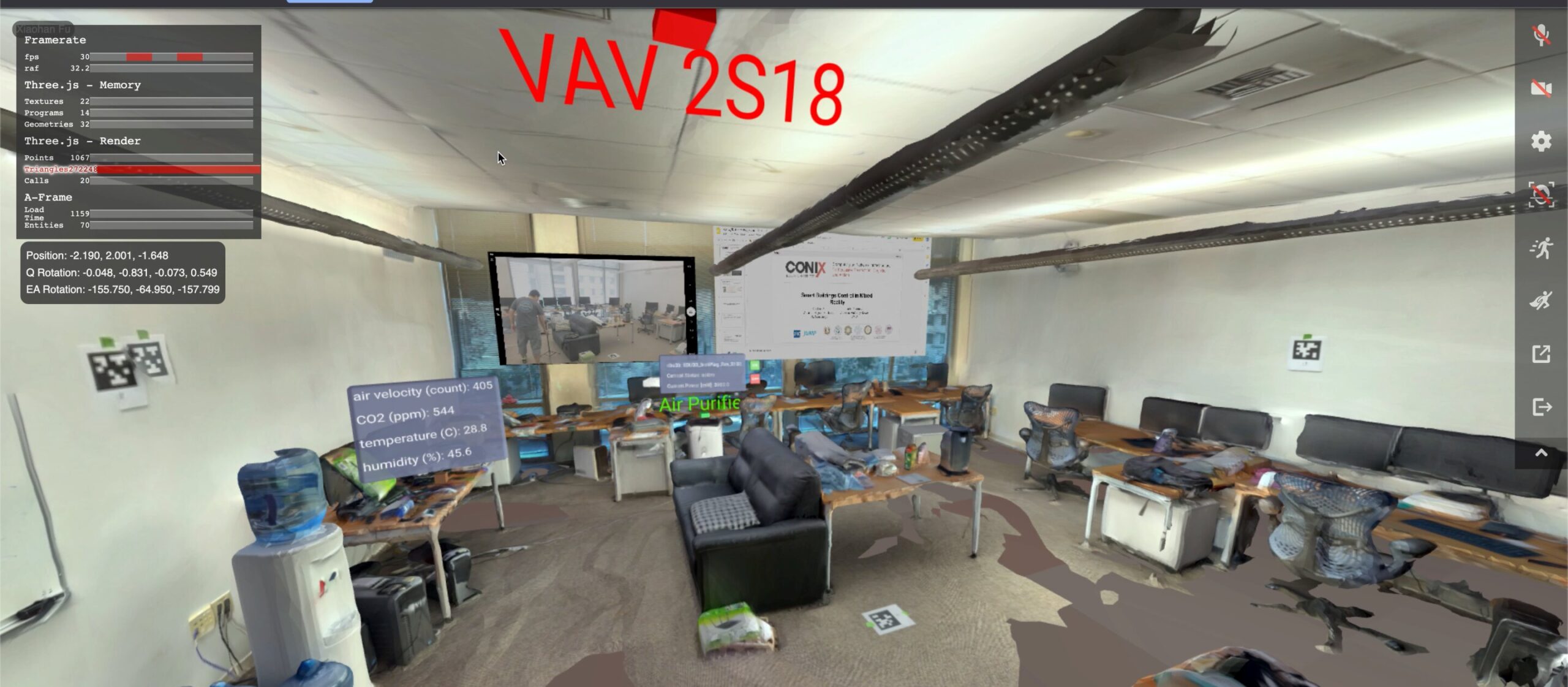

The system, dubbed BRICK, consists of a handheld device equipped with a suite of sensors to monitor temperature, CO2 and airflow. It is also equipped with a virtual reality environment that has access to the sensor data and metadata in a specific building while being connected to the building’s electronic control system.

The team presented their work at the BuildSys '23 conference, held Nov. 15 and 16 in Istanbul, Turkey. The paper has been published in the conference proceedings.

When an issue is reported in a specific location, a building manager can go on-site with the device and quickly scan the space with the LiDAR tool on their smartphone, creating a virtual reality version of the space. The scan can also be done ahead of time. Once they open this mixed reality recreation of the space on a smartphone or laptop, building managers can see where the sensors are, along with the data gathered from the handheld device, overlaid onto that mixed reality environment.

The goal is to allow building managers to quickly identify issues by inspecting hardware and gathering and logging relevant data.

“Modern buildings are complex arrangements of multiple systems from climate control, lighting and security to occupant management. BRICK enables their efficient operation, much like a modern computer system,” said Rajesh K. Gupta, one of the paper’s senior authors, director of the UC San Diego Halicioglu Data Science Institute and a professor in the UC San Diego Department of Computer Science and Engineering.

Currently, when building managers receive reports of a problem, they first have to consult the building management database for that specific location. But the system doesn’t tell them exactly where the sensors and hardware are located in that space. So managers have to go to the location, gather more data with cumbersome sensors, then compare that data against the information in the building management system and try to deduce what the issue is. It’s also difficult to precisely log the data gathered at various points in the space.

By contrast, with BRICK, the building manager can go directly to the location with a handheld device and a laptop or smartphone. On site, they immediately have access to all the building management system data, the location of the sensors and the data from the handheld device, all overlaid in one mixed reality environment. Using this system, operators can also detect faults in building equipment, from stuck air-control valves to poorly operating handling systems.
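To make the idea of cross-checking concrete, here is a minimal Python sketch of how readings from a handheld device might be compared against the values a building management system reports for the same spot. It is an illustration only, not the researchers' implementation: the quantity names, the tolerance values and the `find_discrepancies` function are all assumptions made for this example.

```python
# Hypothetical tolerances: how far a handheld reading may differ from the
# building management system (BMS) value before it is flagged. The
# quantities and thresholds are assumptions chosen for illustration.
TOLERANCE = {"temperature_c": 1.0, "co2_ppm": 100.0, "airflow_cfm": 25.0}


def find_discrepancies(bms, handheld, tolerance=TOLERANCE):
    """Return (quantity, bms_value, handheld_value) for each mismatch.

    Quantities missing from the BMS data or from the tolerance table
    are skipped rather than flagged.
    """
    flagged = []
    for quantity, measured in handheld.items():
        if quantity not in bms or quantity not in tolerance:
            continue
        if abs(measured - bms[quantity]) > tolerance[quantity]:
            flagged.append((quantity, bms[quantity], measured))
    return flagged


# Example: the BMS reports healthy airflow, but the handheld device
# measures far less at the vent, as a stuck valve might cause.
bms_values = {"temperature_c": 22.0, "co2_ppm": 600.0, "airflow_cfm": 200.0}
handheld_values = {"temperature_c": 22.4, "co2_ppm": 640.0, "airflow_cfm": 40.0}
issues = find_discrepancies(bms_values, handheld_values)
print(issues)
```

In this example only the airflow reading falls outside its tolerance, which is the kind of mismatch that would point an operator toward the air-control hardware.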

In the future, researchers hope to find CO2, temperature and airflow sensors that can directly connect to a smartphone, to enable occupants to take part in managing local environments as well as to simplify building operations.

A team at Carnegie Mellon built the handheld device. Xiaohan Fu, a computer science Ph.D. student in the research group of Rajesh Gupta, built the backend and VR components. These build upon the group's earlier work on the BRICK metadata schema, which has been adopted by many commercial vendors.

Ensuring that the location used in the VR environment was accurate was a major challenge. GPS is only accurate to a radius of about a meter. In this case, the system needs to be accurate within a few inches. The researchers’ solution was to post a few AprilTags (visual markers similar to QR codes) in every room. The handheld device’s camera reads the tags, and the system recalibrates to the correct location.
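The recalibration step can be sketched in a few lines of Python. This is a simplified illustration, not the method described in the paper: it assumes each tag's position in the building has been surveyed in advance, that the camera yields the tag's offset from the device already expressed along the building's axes, and that only position (not orientation) needs correcting. The `Vec3` class, the `TAG_POSITIONS` table and the `drift_correction` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    """A position or offset in meters, in building coordinates."""
    x: float
    y: float
    z: float

    def __add__(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)

    def __sub__(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x - other.x, self.y - other.y, self.z - other.z)


# Hypothetical survey table: tag ID -> known position of that tag.
TAG_POSITIONS = {7: Vec3(10.0, 4.0, 1.5)}


def drift_correction(tag_id: int, tag_offset: Vec3, tracked_pos: Vec3) -> Vec3:
    """Return the correction to add to tracked positions after sighting a tag.

    tag_offset is the tag's position relative to the device, as estimated
    from the camera image (assumed already aligned with building axes).
    """
    true_device_pos = TAG_POSITIONS[tag_id] - tag_offset
    return true_device_pos - tracked_pos


# The device believes it is at (9.2, 4.5, 1.0) and sees tag 7 at an
# offset of (1.0, -1.0, 0.5) from itself.
tracked = Vec3(9.2, 4.5, 1.0)
correction = drift_correction(7, Vec3(1.0, -1.0, 0.5), tracked)
corrected = tracked + correction
print(corrected)
```

Because the tag's surveyed position anchors the estimate, accumulated tracking drift is replaced by the much smaller error of a single camera measurement each time a tag comes into view.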

“It’s an intricate system,” Fu said. “The mixed reality itself is not easy to build. From a software standpoint, connecting the building management system, where hardware, sensors and actuators are controlled, was a complex task that requires safety and security guarantees in a commercial environment. Our system architecture enables us to do it in an interactive and programmable way.”

More information:

Xiaohan Fu et al, Debugging Buildings with Mixed Reality, Proceedings of the 10th ACM International Conference on Systems for Energy-Efficient Buildings, Cities, and Transportation (2023). DOI: 10.1145/3600100.3626258

University of California – San Diego

Citation: Bringing together real-world sensors and VR to improve building maintenance (2024, January 31), retrieved 31 January 2024 from https://techxplore.com/news/2024-01-real-world-sensors-vr-maintenance.html

